When it comes to quality assurance (QA) testing distributed software systems, the simple fact of the matter is no amount of preproduction QA testing can unearth all the possible scenarios and failures that may crop up in your real production deployment. And here’s another fact: failures in production are inevitable – network failures, infrastructure failures, application failures. As such, you will see failures in production. It’s hardly a wonder, then, that the practice of Testing in Production (TiP) is gaining steam in DevOps and testing communities. However, rather than waiting around – totally unprepared – for failures to happen, only to deal with them after the fact, there is a certain TiP practice which dictates that engineers should intentionally inject failures into a distributed system to test its resilience and learn from the experience. This approach is known as chaos engineering.

Table of Contents

ToggleIn our previous post – ‘A Complete Introduction to Chaos Engineering’ – we explored the principles of chaos, where it can add value, why we need it, and how chaos engineering can be used to build safer, more performant and more secure systems. In today’s post, we’re going to build on what we’ve learnt so far, and consider the steps you’ll need to take when planning your first chaos experiment.

Chaos Engineering

In essence, chaos engineering is the practice of conducting thoughtful, carefully-planned experiments designed to reveal weaknesses in our systems. Let’s say you’ve developed a new web application – the latest and greatest thing that the whole world has been waiting for. You’ve done all the hard work, and now the time has finally come to launch the service to customers.

But how can you be sure – really sure – that the distributed system you’ve built is resilient enough to survive use in production? The truth is you can’t. Why? Because you don’t know what disasters may strike – outages, network failures, denial-of-service attacks. What’s more, no matter how hard you try, you can’t build perfect software – and the companies and services you depend on can’t build perfect software either.

So we’re back to the start again – failures in production are inevitable. You can’t control that. What you can do, however, is build a quality product that is resilient to failures – software that is able to cope with unexpected events, and ready and prepared for those inevitable disasters.

How? By deliberately making those disasters happen. By breaking things on purpose – not to leave them broken, but to surface unknown issues and weaknesses that could impact your systems and customers. With these weaknesses identified, you can then make your systems fault-tolerant and be fully prepared for when a real disaster strikes.

Chaos Engineering Experiments – What Do You Want to Know?

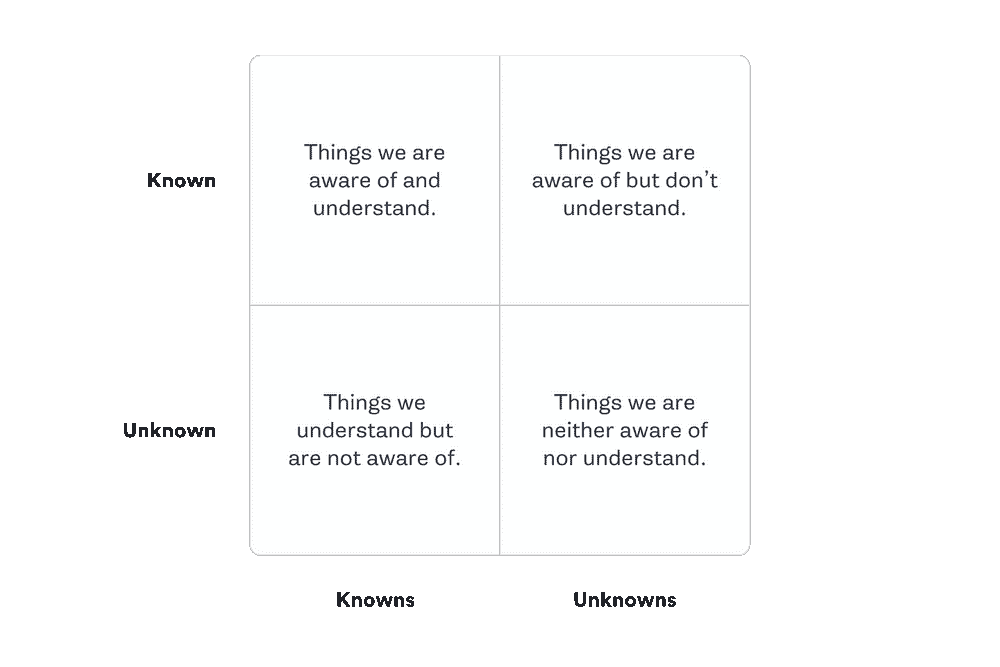

Failure as a Service company and chaos engineering pioneer Gremlin argues that chaos experiments should be conducted in the following order:

- Known Knowns – Things you are aware of and understand

- Known Unknowns – Things you are aware of but don’t fully understand

- Unknown Knowns – Things you understand but are not aware of

- Unknown Unknowns – Things you are neither aware of nor fully understand

(Image source: gremlin.com)

Ok, so how do you go about this?

Well, chaos experiments typically consist of four steps:

- Step 1 – Define the normal or “steady state” of the system, based on a measurable output, such as overall throughput or latency.

- Step 2 – Choose a failure to inject, and hypothesize what you think will go wrong – what will be the impact on your service, system, and customers?

- Step 3 – Isolate an experimental group, and expose that group to a simulated real-world event, such as a server crash or traffic spike.

- Step 4 – Test the hypothesis by comparing the steady state of the control group against what happened in the experimental group. You will be trying to verify (or disprove) your hypothesis at this stage by measuring the impact of the failure. This could be the impact on latency, requests per second, system resources, or anything else you’re testing for.

To put it even more simply – break things on purpose (on a small scale with an isolated experimental group), compare the measured impact of the injected failure, and then move to address any weaknesses that are uncovered.

Importantly, you must have a rollback plan in case things go wrong. For instance, if a key performance metric – such as customer orders per minute – starts to get severely impacted during the chaos experiment, you will need to abort the experiment immediately and return to the steady state as quickly as possible.

Chaos Experiment Examples

To give you a couple of examples. First, let’s say you want to know what happens if your MySQL database goes down. You can reproduce this scenario by running a chaos experiment. You might hypothesize that your application would stop serving requests, and instead return an error. You then simulate this event with an experimental group by blocking that group’s access to the database server. However, what you find is that, afterwards, the app takes an age to respond. You have here identified a previously unknown weakness – and, after some investigation, you will be able to find the cause and fix it.

What about network reliability? Your web application will most likely have both internal and external network dependencies. Internally, you should expect internal teams to maintain network availability – but what about your external network dependencies? How will your system react when it’s unavailable? You can test for this by running what’s called a “network blackhole” chaos experiment, which will make the designated addresses unreachable from your application. You may hypothesize that, during the chaos experiment, the traffic to the external network dependency goes to zero, but will be successfully diverted to your failback system – i.e. that your application continues to function normally (from a user standpoint) during the external network failure, and is able to serve customer traffic without the dependency. However, what you find is that this doesn’t happen – your application doesn’t start up normally, and you are not able to shield your customers from the impacts of the failure. You have successfully found a problem you need to fix.

Of course, when running a chaos experiment, you may find that your system is in fact resilient to the failure you’ve introduced. And this is an equally successful outcome. If your system is resilient to the failure, you’ve increased your confidence in the system and its behavior. And if, on the other hand, you’ve uncovered a problem you didn’t realize you had, you can fix it before it causes a real outage and impacts your customers.

Final Thoughts

There is so much to be learned from conducting chaos experiments, and the discipline of chaos engineering as a whole is gaining traction as one of the most robust and reliable ways of building resilient and stable software systems.

There are now open source tools to help you start conducting your own chaos experiments – the most well-known being the Simian Army from Netflix. Being one of the first notable pioneers of chaos engineering, Netflix’s Simian Army consists of several autonomous agents – known as “monkeys” – for injecting failures and creating different kinds of outages. For example, Chaos Monkey randomly chooses a server and disables it during its usual hours of activity, Latency Monkey induces artificial delays to simulate service degradation, and Chaos Gorilla will simulate an outage of an entire datacenter.

With or without such tools, get started with chaos engineering by compiling a list of chaos experiments. Determine how you want to simulate them, and what you think the impact will be. Pick a date for running the experiments, and inform all stakeholders that systems will be affected during a set time. Following the experiment, record the measured impact, and for each discovered weakness, make a plan to fix it. Going forward, be sure to repeat each chaos experiment on a regular basis to ensure your solutions remain solid, and to uncover any new problems. Go create chaos – your systems will be more resilient for it.

First Chaos Engineering Experiment

Chaos engineering is the practice of conducting thoughtful, carefully-planned experiments designed to reveal weaknesses in our systems. Failures in production are inevitable. You can’t control that. What you can do, however, is build a quality product that is resilient to failures – software that is able to cope with unexpected events, and ready and prepared for those inevitable disasters. Failure as a Service company and chaos engineering pioneer Gremlin argues that chaos experiments should be conducted in the following order: Known Knowns – Things you are aware of and understand. Known Unknowns – Things you are aware of but don’t fully understand. Unknown Knowns – Things you understand but are not aware of. Unknown Unknowns – Things you are neither aware of nor fully understand. chaos experiments typically consist of four steps: Step 1 – Define the normal or “steady state” of the system, based on a measurable output, such as overall throughput or latency. Step 2 – Choose a failure to inject, and hypothesize what you think will go wrong – what will be the impact on your service, system, and customers? Step 3 – Isolate an experimental group, and expose that group to a simulated real-world event, such as a server crash or traffic spike. Step 4 – Test the hypothesis by comparing the steady state of the control group against what happened in the experimental group. You will be trying to verify (or disprove) your hypothesis at this stage by measuring the impact of the failure. This could be the impact on latency, requests per second, system resources, or anything else you’re testing for.