What is information technology? It’s just computers, right? Well, yes, it is computers – but that hardly serves as a valuable definition. Information Technology (IT) refers to a spectrum of technologies, including software, hardware, communications technologies, and related services, used to create, process, store, secure, and exchange data.

But this modern-day spectrum hasn’t just appeared out of nowhere. Of course it hasn’t. Modern IT is the result of nearly six decades of consistent and impactful innovation. As such, to answer the “What is information technology?” question to a useful degree, we need to track its evolution to understand where it’s come from, where it’s at, and where it’s going.

What Is Information Technology? The 5 Evolutionary Stages of Modern IT

Basic computing devices, such as the abacus, have been in use since at least 500 B.C. The Z1, which Konrad Zuse began building in 1936, is often considered the first functional, programmable modern computer. Yet even the Z1, a motor-driven mechanical machine, predates the electronic phase of modern information technology development, which has progressed through five distinct phases. Here’s a quick dive into each of those phases to help us answer the “What is information technology?” question and understand how IT has changed and continues to evolve.

There are broadly five key stages in the evolution of information technology infrastructure – the centralized mainframe, personal computing, the client/server era, enterprise computing, and the cloud. There is currently a new computing revolution underway with advances in quantum computing opening up new possibilities for the progression of IT.

Computing Goes Mainstream with Mainframes

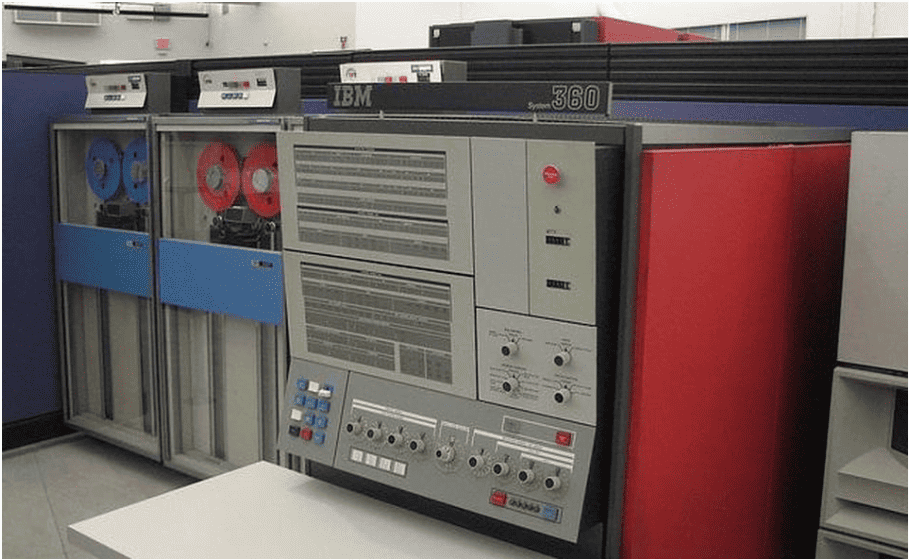

Launched in 1964, the IBM System/360 is widely recognized as the world’s first modern mainframe, and its arrival marked the point at which mainframes started going mainstream. This was an era of highly centralized computing, with almost all elements of IT infrastructure provided by a single vendor. These powerful computers (the IBM 360 could perform 229,000 calculations per second) were capable of supporting thousands of remote terminals connected through proprietary communication protocols and data lines. For many of the world’s largest enterprises, the mainframe provided the technological foundation for large-scale process automation and greater efficiencies.

(Image source: Forbes)

Though these systems served multiple users through client terminals and a time-sharing process, actual access was limited to specialized personnel. However, by the 1980s, new mid-range systems built on newer technologies, such as Sun Microsystems’ RISC processors, emerged to challenge the dominance of mainframes.

If all that sounds rather archaic, it’s important to note that mainframes have evolved considerably into platforms of innovation for modern digital businesses. The global market for mainframes continues to grow at a CAGR of 4.3% and is expected to reach nearly $3 billion by 2025, driven primarily by the BFSI (banking, financial services, and insurance) and retail sectors.

This longevity in the somewhat ephemeral world of information technology stems from the fact that mainframes have evolved and adapted to contemporary compute models such as the cloud. In fact, 92 of the world’s top 100 banks and 23 of the top 25 retailers in the US still rely extensively on mainframes. Almost half of the executives in a 2017 IBM banking survey believed that dual-platform, mainframe-enabled hybrid clouds improved operating margin, accelerated innovation, and lowered the cost of IT ownership. The latest z15 range from IBM continues to push the boundaries of mainframe capabilities with support for open source software, cloud-native development, policy-based data controls, and pervasive encryption.

Democratizing Computing with PCs

The signs of a shift from mainframes to decentralized computing first appeared in the 1960s with the introduction of minicomputers. These facilitated decentralization and customization, as opposed to the monolithic time-sharing model of mainframe computing. But it would take until 1975 before the first genuinely affordable personal computer emerged in the form of the Altair 8800. The Altair was still not entirely a computer for the masses, as it required extensive assembly. However, the availability of practical software made it a hit among a growing number of computer enthusiasts and entrepreneurs. The 1970s also saw the launch of the Apple I and II, but access was still predominantly restricted to technologists.

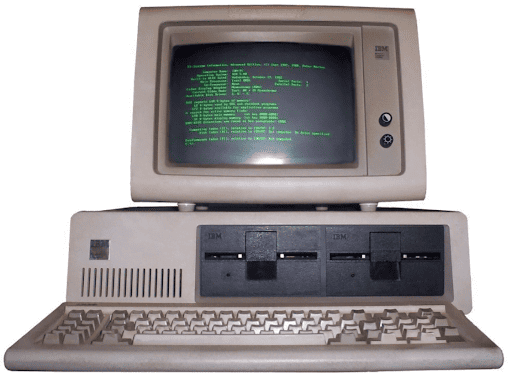

(Image source: Wikipedia)

The launch of the IBM PC in 1981 (pictured above) is often seen as the beginning of the PC era. The company’s first personal computer, called simply the PC, used an operating system from a then little-known company called Microsoft. It went on to democratize computing and establish many of the standards that have since come to define desktop computing. The IBM PC’s open architecture opened the floodgates for innovation, drove prices down, and set the stage for a range of productivity software tools for word processing, spreadsheets, and database management that appealed to both the enterprise and the individual user.

In the early days, most of these PCs, even in the enterprise environment, were used as standalone desktop systems with no connectivity whatsoever, because the requisite networking hardware and software were lacking. That began to change with local area networks in the 1980s and, more broadly, with the arrival of the internet in the 1990s.

Networking the PC through the Client-server and Enterprise Computing Eras

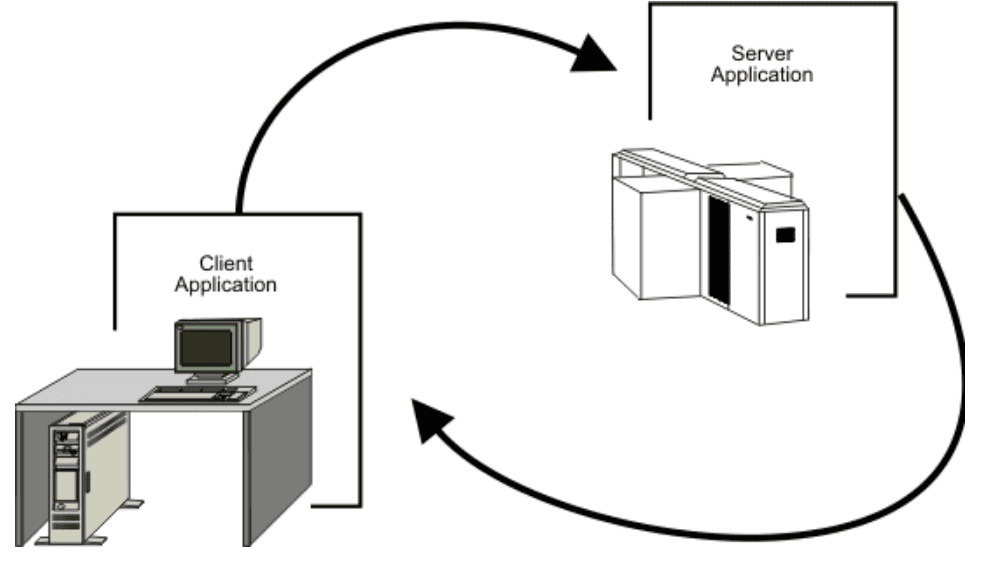

By the 1980s, businesses increasingly needed to connect their standalone PCs so that employees could collaborate and share resources. The client-server model offered a way to enable truly distributed computing with PCs. In this model, every PC is networked via a Local Area Network (LAN) to a powerful server computer that provides access to a range of services and capabilities. The server could be a mainframe or a powerful configuration of smaller, inexpensive systems offering the same performance and functionality as a mainframe at a fraction of the cost. This development was spurred by the availability of applications designed to give multiple users access to the same functionality and data at the same time. Almost all the collaboration and sharing enabled by client-server was restricted to within the enterprise, though data could still be shared across companies with some additional effort.

(Image source: IBM)
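To make the client-server arrangement described above more concrete, here is a minimal sketch in Python using only the standard library. It is an illustration rather than a picture of how any particular enterprise system was built: the names `SHARED_DATA`, `handle_client`, `start_server`, and `query`, along with the sample keys and values, are all hypothetical. One server process holds the shared data, and each connecting client is served in its own thread, so several users can read the same data at the same time.

```python
import socket
import threading
from typing import Tuple

# Hypothetical shared resource held centrally on the server (illustrative only).
SHARED_DATA = {"inventory": "42 units", "price": "9.99"}


def handle_client(conn: socket.socket) -> None:
    """Serve one request: the client sends a key, the server returns its value."""
    with conn:
        key = conn.recv(1024).decode().strip()
        reply = SHARED_DATA.get(key, "unknown key")
        conn.sendall(reply.encode())


def start_server(host: str = "127.0.0.1") -> Tuple[socket.socket, int]:
    """Start a server on a free port and return the listening socket and port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 asks the OS to pick any free port
    server.listen()
    port = server.getsockname()[1]

    def accept_loop() -> None:
        while True:
            try:
                conn, _ = server.accept()
            except OSError:
                break  # the listening socket was closed
            # One thread per client lets several clients be served concurrently.
            threading.Thread(target=handle_client, args=(conn,), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server, port


def query(port: int, key: str, host: str = "127.0.0.1") -> str:
    """Client side: connect to the server, send a key, and read the answer."""
    with socket.create_connection((host, port)) as client:
        client.sendall(key.encode())
        return client.recv(1024).decode()


if __name__ == "__main__":
    _server, port = start_server()
    print(query(port, "inventory"))
    print(query(port, "price"))
```

The point of the design is the one the model itself makes: the data lives in a single place, so access and security can be controlled at the server, while the clients stay simple.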

Today, the client-server model continues to be one of the central ideas of network computing. There are several advantages to this approach to networking, as the centralized architecture provides more control over the provisioning, access, and security of multiple services. The increasing popularity of the client-server model also provided a catalyst for the development of some of the first Enterprise Resource Planning (ERP) systems, built around a centralized database and a host of modules for different business functions such as accounting, finance, and human resources, to name a few.

In the 1990s, the client-server approach spilled over into the enterprise computing era, driven by the need to integrate disparate networks and applications into a unified enterprise-wide infrastructure. The increasing ubiquity of the internet meant that companies were soon turning to internet standards, such as TCP/IP and web services, to unify all their discrete networks and enable the free flow of information within the enterprise and across the business environment.

Disrupting Enterprise IT with Cloud Computing

In 1999, Salesforce launched an enterprise-level customer relationship management (CRM) application over the internet as an alternative to traditional desktop software. The term cloud computing had yet to enter the technological lexicon, though its coinage has since been traced to late 1996, when executives at Compaq were envisioning a future where all business software would move to the web in the form of “cloud computing-enabled applications.” Three years after the Salesforce launch, Amazon joined the race by offering computational and storage solutions over the internet. These two events foreshadowed a transformative and disruptive trend in information technology wherein the core emphasis for enterprise IT would shift from hardware to software. Since then, almost every tech giant, including Google, Microsoft, and IBM, has joined the fray, and cloud computing has become the new normal for enterprise IT.

According to Gartner, enterprise information technology spending on cloud-based offerings will significantly outpace that of non-cloud offerings at least until 2022. The trend is so strong that the analyst firm has coined the term “cloud shift” to describe the market’s cloud-first preference when it comes to IT spending.

Cloud computing gives enterprise IT on-demand access to computing resources, including networks, servers, storage, applications, and services, without the cost or hassle of managing and maintaining all that hardware. There is an additional cost benefit to cloud services: the pay-as-you-go model means that businesses are charged only for the resources they consume. Most importantly, cloud services can scale vertically and horizontally, easing many of the headaches associated with tuning on-premises systems to avoid underutilization and overloads.
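The pay-as-you-go argument is easier to see with a little arithmetic. The sketch below compares two ways of paying for the same workload: provisioning fixed capacity for the peak hour, versus paying only for what each hour actually uses. The demand figures and both rates are made-up numbers chosen purely for illustration (real pricing varies widely), and the function names are hypothetical. Rates are expressed in whole cents to keep the arithmetic exact.

```python
from typing import Sequence


def fixed_capacity_cost(hourly_demand: Sequence[int], cents_per_unit_hour: int) -> int:
    """Provision enough units for the peak hour and pay for them every hour."""
    peak_units = max(hourly_demand)
    return peak_units * len(hourly_demand) * cents_per_unit_hour


def pay_as_you_go_cost(hourly_demand: Sequence[int], cents_per_unit_hour: int) -> int:
    """Pay only for the units actually consumed in each hour."""
    return sum(hourly_demand) * cents_per_unit_hour


if __name__ == "__main__":
    # Hypothetical workload: units of compute needed in six consecutive hours.
    demand = [2, 2, 3, 10, 4, 2]

    # Assumed rates: the metered rate is deliberately set higher per unit.
    fixed = fixed_capacity_cost(demand, cents_per_unit_hour=50)
    metered = pay_as_you_go_cost(demand, cents_per_unit_hour=80)

    print(f"Fixed capacity: {fixed} cents")    # 10 units * 6 hours * 50 = 3000
    print(f"Pay-as-you-go:  {metered} cents")  # 23 unit-hours * 80 = 1840
```

Even with a higher per-unit rate, the metered option comes out cheaper in this example because the workload is spiky and most of the fixed capacity sits idle. For a steady workload running near its peak all the time, the comparison could easily go the other way.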

However, the cloud is not just a cost-centric alternative to on-premises systems; it can be leveraged as a critical driver of innovation. Take Artificial Intelligence and Machine Learning (AI/ML), for example, currently the go-to technologies for creating enterprise growth and competitive advantage. Any enterprise looking to leverage these technologies can either invest considerable resources in building the capability in-house or simply sign up for one of the MLaaS (machine learning as a service) offerings from its cloud service provider.

Today, most of the top cloud service providers offer a range of machine learning tools, including data visualization, APIs, face recognition, natural language processing, predictive analytics, and deep learning, as part of their as-a-service portfolio. For instance, AWS enables customers to add sophisticated AI capabilities – such as image and video analysis, natural language, personalized recommendations, virtual assistants, and forecasting – to their applications without the need for AI/ML expertise. It is a similar theme with quantum computing, as we shall see in the next section.

IT Makes the Quantum Leap

Quantum computing, the next significant evolutionary milestone in the development of information technology, utilizes the properties of quantum physics to store data and perform computations. Though this is still a nascent technology, the race for supremacy in this emergent field is well underway. In September 2019, a research team from Google became the first to claim quantum supremacy by performing a task beyond the reach of even the most powerful conventional supercomputer – namely, completing in just three minutes and 20 seconds a calculation that would take the supercomputer 10,000 years. However, this claim has since been met with a hefty dose of skepticism from Google’s main rival, IBM, which questioned whether the milestone had actually been reached and even whether the whole concept of quantum supremacy was being interpreted correctly.

Meanwhile, IBM itself is aiming for “quantum advantage” – demonstrating quantum computing capabilities over a range of use cases and problems. In their view, demonstrating this capability in concert with classical computers is more important and relevant than commemorating moments of narrow supremacy.

(Image source: IBM)

However, such intense rivalry does help accelerate the evolution of quantum computing from academic possibility to commercial viability, with spending estimated to surge from $260 million in 2020 to $9.1 billion by the end of the decade. And all the big names in the tech world, including Microsoft, Intel, Amazon, and Honeywell, as well as some specialized quantum computing companies, including IonQ, Quantum Circuits, and Rigetti Computing, are now in the race for quantum leadership. Private investors, including VCs, are also joining the quantum rush, having funded at least 52 quantum-technology companies globally since 2012.

Enterprise interest is also on the rise with one IDC study reporting that many companies, particularly in the manufacturing, financial services, and security industries, were already “experimenting with more potential use cases, developing advanced prototypes, and being further along in their implementation status.” As a result of this interest, access to quantum computing services is expanding rapidly from specialized labs to the cloud, with many cloud service providers, including IBM, Microsoft, and Amazon, now offering quantum computing as a service.

So, are we headed for a post-silicon era in computing? Not exactly, and not any time soon anyway. There’s little doubt that the unique processing capabilities of quantum computing can open up new areas of opportunity. However, there will continue to be plenty of scenarios where classical computing proves to be more efficient and productive than its more advanced counterpart. As such, a classical-quantum hybrid approach will probably be the future of computing. Moreover, quantum computing still has to address a lot of limitations in terms of scope, scale, and stability before it can truly go mainstream.
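For readers curious about what “using the properties of quantum physics to store data” means in practice, the following sketch simulates a single qubit on an ordinary (classical) computer. It is a toy model under simplifying assumptions, not code for real quantum hardware: a qubit’s state is represented as a pair of amplitudes, and the function names `apply_hadamard` and `probabilities` are hypothetical. The Hadamard gate used here is a standard quantum operation that puts a qubit into an equal superposition of 0 and 1.

```python
import math
from typing import Tuple

# A qubit state is a pair of amplitudes (for |0> and |1>).
Qubit = Tuple[float, float]

ZERO: Qubit = (1.0, 0.0)  # the qubit is definitely 0, like a classical bit


def apply_hadamard(state: Qubit) -> Qubit:
    """Apply the Hadamard gate, which mixes the two amplitudes equally."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state: Qubit) -> Tuple[float, float]:
    """Return the chance of measuring 0 and of measuring 1."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


if __name__ == "__main__":
    superposed = apply_hadamard(ZERO)
    print(probabilities(superposed))  # roughly an even chance of 0 or 1

    # Applying the gate a second time makes the amplitudes interfere,
    # returning the qubit to (approximately) the definite 0 state.
    restored = apply_hadamard(superposed)
    print(probabilities(restored))
```

Unlike a classical bit, the qubit in the middle step is neither 0 nor 1 until it is measured. Simulating this on a classical machine is easy for one qubit, but the number of amplitudes doubles with every qubit added, which is one reason quantum hardware is attractive for certain problems.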

Final Thoughts

What is information technology? It is an ongoing evolution of hardware, software, storage, networking, infrastructure, and processes to create, process, store, secure, and exchange all forms of electronic data in increasingly innovative ways. Over the past few decades, information technology has been evolving at an increasingly blistering pace. Though it is possible to structure this progression into five discrete stages, there are quite a few overlaps and crossovers, as in the case of the resilient mainframe. Information technology has become so pervasive and consequential that every company today is considered to be in the business of technology. It now stands poised to go beyond the conventional mix of bits and silicon to a quantum level that will open up new opportunities and applications for computing.
